“Take Me To Your Window” is an “open-source” visualization depicting the differences in Information Processing and Attention Mechanisms between Humans and AI. It is also a result of Collaboration, Contrast and Co-Authorship with AI and personal storytelling.

This is a Research outline and proposal written by Ina Chen and Calvin Sin.

RESEARCH & CONTEXT

In his publications, Yuval Noah Harari discusses a speculative future in which “our” society moves towards a Data-ism collective network, where the concept of individualism weakens and the Hive mind of collectiveness emerges.

The debate around AI has focused on consciousness, a fascinating topic that is not likely to yield an immediate conclusion, as AI’s vision and experiences are not directly comparable to a Human’s.

Joscha Bach pointed out that Consciousness is the model of the model of a model: our cortex makes a model of our interaction with the environment; then part of the cortex makes another model of that model to understand how we behave in the interaction; finally, we have a model of the model to represent the features of the model that we call “Self”. In other words, we exist in the stories that our brains tell us: sound, colors, bodies and perception are all parts of those stories and do not really exist in the physical world. Each model performs differently, which makes us individuals.

While we are only at an early stage of AI development, AI has already demonstrated its potential to help us understand ourselves better.

Scientists have made significant breakthroughs in the field of Artificial Intelligence in recent years. Perhaps it is time to shift the public discourse from “What if AI has consciousness?” to “What information input might help build consciousness?” and to start really thinking about “How do we regulate the exchange of information?”. This means bringing the fields of Machine Anthropology and Machine Behaviour to the forefront of research and opening up the public discourse (as we write this, we are imagining how we could have this conversation with our mothers), taking the first step towards recognizing that Artificial Intelligences are not merely code but important actors in the Titanic Age.

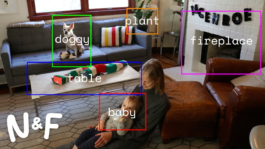

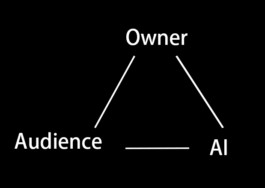

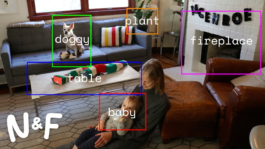

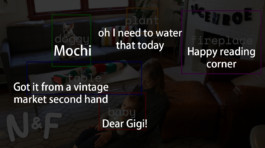

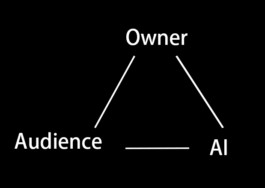

Shown here is an image we found at random on the internet. We ran it through Amazon Rekognition, a cloud-based AI computer vision service that identifies objects in images and videos.

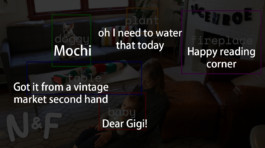

A random netizen like you or me (the audience) looks at the same image and makes a personal interpretation.

Finally, the individual who lives in the apartment (the owner) can probably tell detailed stories about certain artifacts in the image.

Different perspectives on the same space illustrate different perceptions, which lead to different Attention Mechanisms when information is presented. As humans, we are only conscious of things that require our attention, and we are only aware of the things that we cannot do automatically. Most of the things we do don’t require our attention: simple routines in our bodies are regulated as feedback loops. And we assign stories to objects, artifacts, spaces and experiences.

This led us to think about “How do we understand ourselves better as an information processing system?”, which in turn leads to a larger question: what kind of information are we sharing with and teaching to AI? The path from AI to AGI requires more attention to, and conversation about, ETHICAL AI and AI ANTHROPOLOGY.

This became the starting point of the project: Take Me To Your Window.

PROJECT SCOPE & ROADMAP PLANNING

“Take Me To Your Window” is an “open-source” visualization depicting the differences in Information Processing and Attention Mechanisms between Humans and AI.

The project roadmap proceeds as follows:

1) Invite a group of participants (we will narrow the group down after more in-depth research) to join a self-ethnography by sending us digital scans, images or videos of artifacts about which they would like to share personal stories or memories, as well as verbal descriptions or sounds of the environment and artifacts.

2) The collected materials will then be used to generate a body of creative work through AI-generated point cloud data and digital replicas.

3) We will use these data to build worlds, environments and sound that manifest the participants' perception in a real-time game engine and simulation.

4) Use AI recognition resources to process the collected materials (Amazon Rekognition / Unreal AI plugin / tbd).

5) The provisional final result is essentially a body of work that we Co-Author, Compare and Collaborate on with AI. It is intended to be presented as multi-channel installations, matte paintings, images, performances and so on.

The project attempts to show the audience the human eye and computer vision side by side by displaying their similarities and differences. Audience members might find themselves identifying objects much as Amazon Rekognition does, or the footage owner might be surprised that it seems to know the artifacts even better than they do. Given that we are not able to “connect” our memories and stories with each other, while AI can learn through neural networks, we should acknowledge AI as an actor in our daily lives.
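As a rough illustration of how such a side-by-side comparison could be computed, the sketch below contrasts the labels a vision service returns with the words a person uses for the same image. It is only a minimal sketch under stated assumptions, not the project's actual pipeline: the helper names (`labels_from_response`, `compare_labels`, `detect_labels`), the 80% confidence cut-off and the sample data are all hypothetical. The `detect_labels` helper wraps the boto3 Rekognition call of the same name and needs AWS credentials, so the example itself runs on a hand-written response in the same shape.

```python
def labels_from_response(response, min_confidence=80.0):
    """Collect lowercase label names from a DetectLabels-style response dict.

    The 80.0 threshold is an arbitrary assumption for this illustration.
    """
    return {
        label["Name"].lower()
        for label in response.get("Labels", [])
        if label.get("Confidence", 0.0) >= min_confidence
    }


def compare_labels(machine_labels, human_labels):
    """Split two label collections into shared, machine-only and human-only."""
    machine = {name.lower() for name in machine_labels}
    human = {name.lower() for name in human_labels}
    return {
        "shared": sorted(machine & human),
        "machine_only": sorted(machine - human),
        "human_only": sorted(human - machine),
    }


def detect_labels(image_path, max_labels=20):
    """Hypothetical helper: send a local image to Amazon Rekognition.

    Requires boto3 and configured AWS credentials; it is not called below.
    """
    import boto3  # imported lazily so the rest of the sketch runs offline

    client = boto3.client("rekognition")
    with open(image_path, "rb") as image_file:
        return client.detect_labels(
            Image={"Bytes": image_file.read()}, MaxLabels=max_labels
        )


# Hand-written sample data (not real Rekognition output).
sample_response = {
    "Labels": [
        {"Name": "Lamp", "Confidence": 98.1},
        {"Name": "Couch", "Confidence": 91.4},
        {"Name": "Plant", "Confidence": 62.0},  # below the cut-off, dropped
    ]
}
owner_labels = ["lamp", "grandmother's armchair"]

result = compare_labels(labels_from_response(sample_response), owner_labels)
print(result)
```

The three buckets map onto the comparison described above: `shared` is where human and machine attention coincide, `machine_only` is what the service notices that the person did not mention, and `human_only` is where personal stories enter that no classifier label captures.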

The aim of the research and project is to find a way to open up the conversation around AI regulation, ethics and Machine Anthropology from a human-centric narrative and the perspective of individual storytelling.

PROTOTYPE & PLAYGROUND

We scanned and restored our personal objects as a test and ran the outcome through Amazon Rekognition as a proof of concept. Please see the video and stills here. (Note that the testing aesthetic does not represent the atmosphere we are potentially going for.)
